I used artificial intelligence to write up notes from #NAMS25, and then this happened… (isn't that how you write a headline these days?)

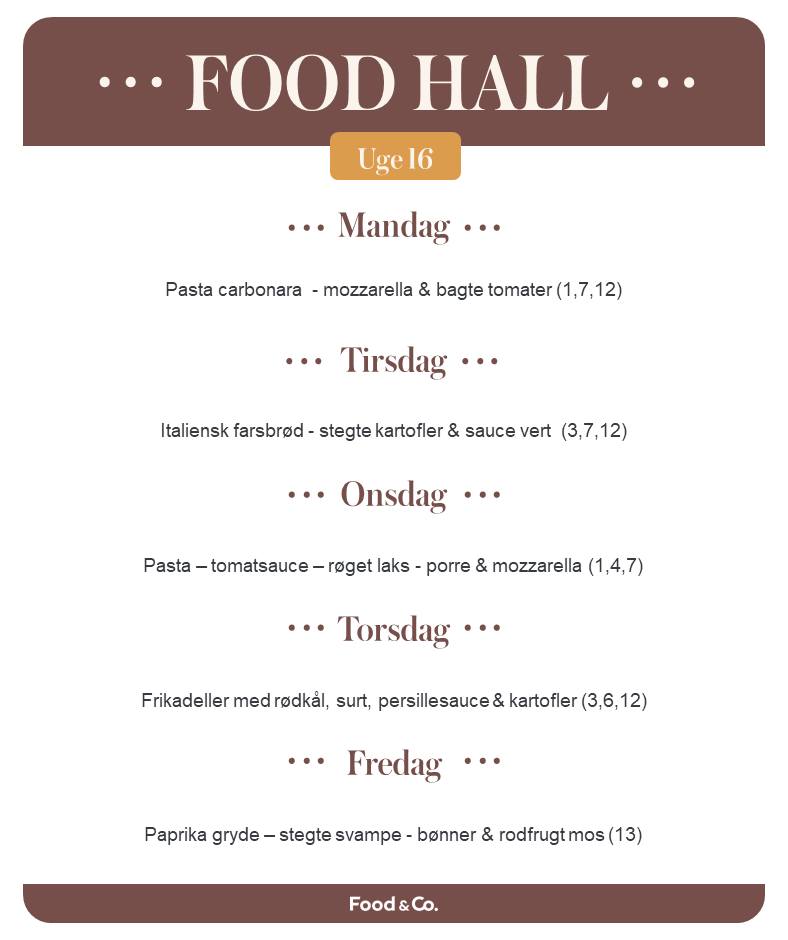

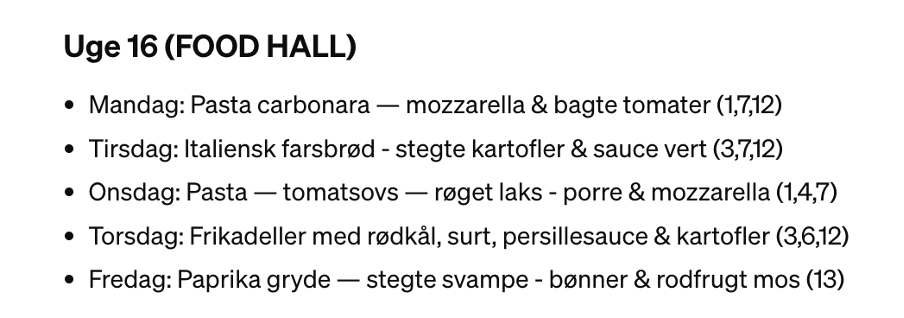

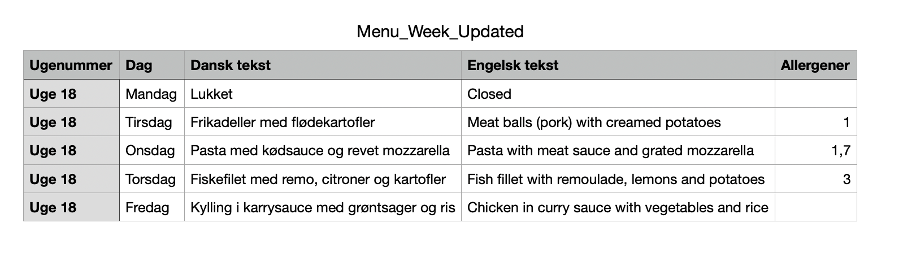

TL;DR version: ChatGPT was best at handouts (summary notes), especially in English; in Danish they were clunky.

Pinpoint and NotebookLM would have required me to work quite a bit on the prompt; without that, the results quickly turned banal. But I love that both of them let you see where in the transcript they pull the main points from (ChatGPT claims it can do this too, but it's a bit shaky). The most fun by far was building a GPT from 20 of the transcripts (the maximum number of documents a GPT in ChatGPT can hold). I asked it to turn the documents into an AI-strategy GPT that could advise me. After a proper telling-off(!) I even got it to give me exact quotes from the documents.

I also tested ChatGPT's Canva GPT integration. It makes decent slide-design suggestions but doesn't insert the text itself; you have to do that manually. Prezi, on the other hand, has built an AI feature that can generate slides, both design and text, from something like a handout. And the result could be used with very few edits.

The longer version: The original brief was: you have 8 minutes and 10 slides to tell us "something about artificial intelligence" at the all-staff meeting. I rarely stick to the format, because I always get too excited and think there are 200 things my colleagues ought to see and know. To get the most out of the assignment, I set myself the goal of using the preparation to test new tools.

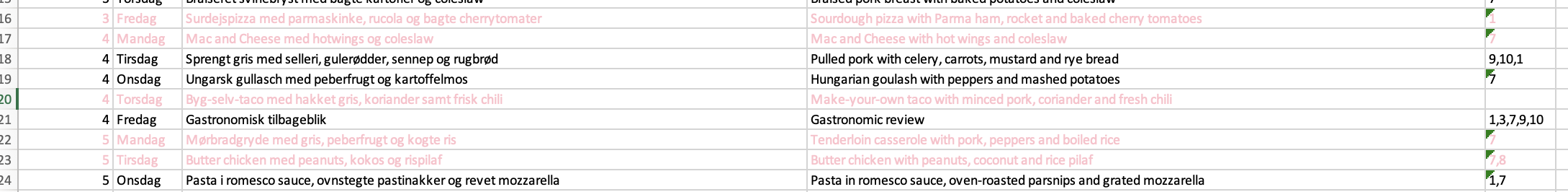

On Tuesday, Peter had asked whether I would talk a bit about the recently concluded Nordic AI in Media Summit, NAMS (you can read much more about the conference from other good people; this post is about tools). I had taken notes in the Notes app, as most of us do at conferences: snapping a few photos of slides and jotting down random thoughts along the lines of "I like the term vibe coding." I had also shared the document with another attendee, who added comments along the way. A few years ago, I would have built my talk on those notes, and on my not always razor-sharp memory.

Instead, I tested the following. I started by finding all the talks on YouTube: Nordic AI in Media Summit (hooray for them being online so quickly).

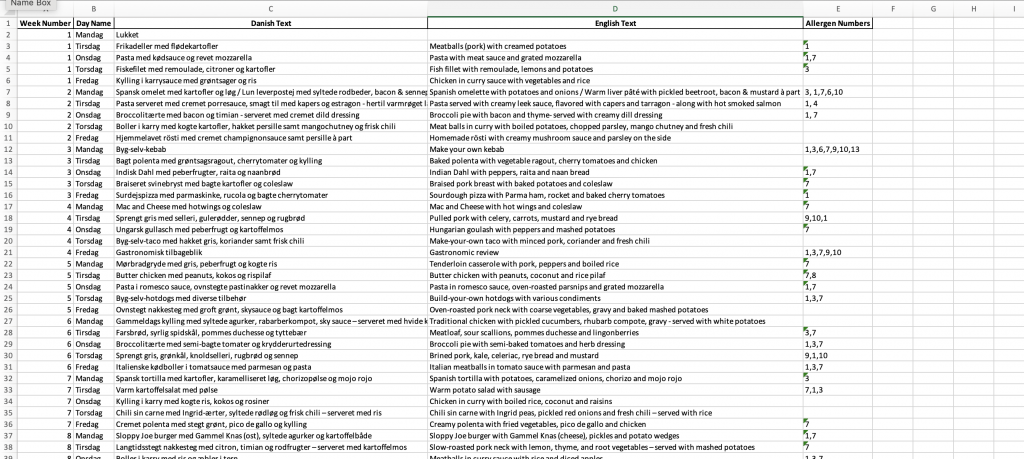

Then I used Notegpt.io to transcribe all the talks. It took between 3 and 10 seconds per talk. The transcription mixed up names and the like, but nothing that was a problem for what I needed.

Summaries and handouts

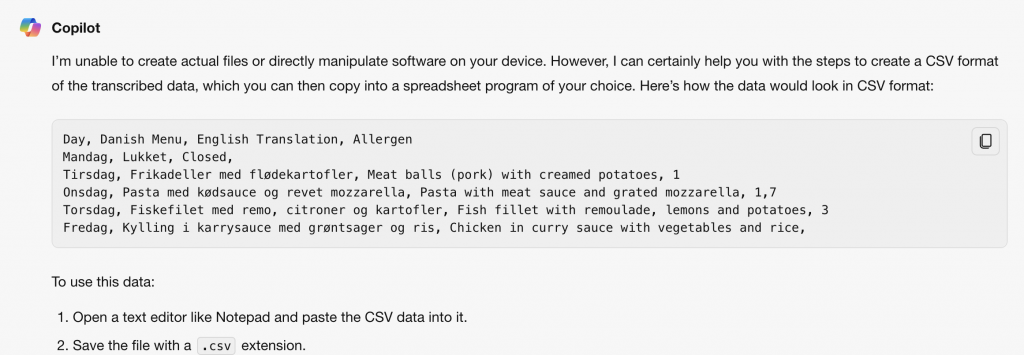

The first thing I did was give ChatGPT one of the transcripts and ask it for the main points. Overall, the result was fine: it was quite accurate when all it had to do was produce a single handout. There was, however, a clear difference between Danish and English. The Danish was generally rather clunky (I used GPT-4o).

Automated slides

But life is too short to make slides, and ChatGPT now has a Canva integration (if you go to GPTs, you can pick Canva). I took the handout I had generated and asked it to turn it into slides. It then suggested a few designs to choose from. They were fairly generic but stayed on topic and could easily serve as a starting point. However, I had to insert the text myself and edit it to fit the format and so on, which felt like a bit of a waste of time.

So I googled "generate AI slides" and was sent over to good old Prezi, which now also has an AI version. I fed it the handout ChatGPT had made, and it took care of slides, text, layout and so on. It all came out a bit generic, but I didn't have to fix much before I had a talk that would match what you see at many conferences. All well and good, but what I actually needed was an overall summary of all the talks.

Pinpoint, NotebookLM, GPT

So instead I tried loading all the transcripts into NotebookLM and Google Pinpoint (where I have switched on the AI integration). For NotebookLM I had to convert the documents to PDFs, because it doesn't accept Word documents. Sigh. The results were quite similar in the two tools. The insights they generate automatically have their pros and cons: people mentioned (Donald Trump was the most mentioned), institutions and so on (a bit messy, because I hadn't proofread the transcripts). NotebookLM's mind map offered a fun insight. Overall, I find NotebookLM's summaries (Briefing doc, Study Guide, FAQ) quite sharp. I honestly think I could have got some good ideas by playing further with the features in both tools, but that would have taken extra work.

So I turned back to ChatGPT to see what I could get out of building a GPT from the transcripts. I asked it to build a GPT that could advise me on integrating artificial intelligence in media companies. Overall, the answers were good and very faithful to what was said at the conference. That also means it can't answer speculatively; it answers concretely, based on what was said. When I asked it to back up its answers with quotes from the documents I had fed it, it started out giving me generic, fabricated quotes (I cross-checked, of course). But after a short telling-off, I got the quotes I needed, although it couldn't link them back to the documents (it produced dead links).
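If you want to do the same cross-check without rereading everything, a few lines of code go a long way. Below is a minimal Python sketch, assuming the transcripts have been saved as UTF-8 text files, one per talk, in a single folder. The function names (`load_transcripts`, `normalize`, `find_quote`) and the folder layout are made up for illustration; they are not part of ChatGPT, NotebookLM or Notegpt.io. The sketch checks whether a quote appears verbatim in any transcript after evening out case, whitespace and curly quotes, so an invented quote simply comes back with no matches.

```python
import re
import unicodedata
from pathlib import Path


def load_transcripts(folder: str) -> dict[str, str]:
    """Read every .txt file in a folder (hypothetical layout: one transcript per talk)."""
    return {p.name: p.read_text(encoding="utf-8") for p in sorted(Path(folder).glob("*.txt"))}


def normalize(text: str) -> str:
    """Lowercase, straighten curly quotes and collapse all whitespace before comparing."""
    text = unicodedata.normalize("NFKC", text)
    for curly, straight in (("\u201c", '"'), ("\u201d", '"'), ("\u2018", "'"), ("\u2019", "'")):
        text = text.replace(curly, straight)
    return re.sub(r"\s+", " ", text).strip().lower()


def find_quote(quote: str, transcripts: dict[str, str]) -> list[str]:
    """Return the names of the transcripts that contain the quote verbatim (after normalizing)."""
    needle = normalize(quote)
    return [name for name, body in transcripts.items() if needle in normalize(body)]


if __name__ == "__main__":
    # Toy data standing in for real transcripts
    transcripts = {
        "talk_01.txt": "We started with vibe coding.\nIt changed how the newsroom works.",
        "talk_02.txt": "AI will not replace editors.",
    }
    print(find_quote("vibe coding. It changed", transcripts))  # ['talk_01.txt']
    print(find_quote("AI will replace editors", transcripts))  # []
```

The check is deliberately strict: a genuine quote can still fail if the transcription garbled a word, so an empty result means "look it up by hand", not necessarily "fabricated".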

How I would use all this as a journalist

I would hardly use the GPT to write journalistic reports or, for that matter, to build my talk for my colleagues. But there was something rather satisfying about being able to ask it, for example:

"We are about to reform our bachelor's programme. How do we best integrate artificial intelligence? What are the opportunities, and what are the risks?" and actually get some good answers grounded in the talks given by the smart people at the conference. In my job, this would probably be the most useful part.

If I were a journalist covering the conference, I would probably record the talks while listening and noting down quotes myself. Then I would have the recording transcribed and ask ChatGPT to write a short article after each talk (giving it the angle and the format). I would then rewrite that article and add my own quotes. That way I could produce faster, and probably better, than I could manage without tools.

The generated slides from Canva and Prezi:

I could see myself using Canva, because it genuinely makes better-looking slides than I do, but it wouldn't make my work any easier. Prezi? Hmm. The result looks very Prezi-like (and a subscription costs 15 dollars), but I was actually impressed by the content. It does tend to get a bit generic, but the slides are easy to edit, so they can be an okay starting point.

What now? What would I test next?

A GPT can hold only 20 documents, unlike Pinpoint and NotebookLM, but I think I would try adding my own notes and photos of slides in all three places and see whether that lifts the content. I sometimes write talks, strategies, grant applications and that kind of document, and I could undoubtedly get new ideas and phrasings by bouncing ideas off the conference transcripts, in Pinpoint, NotebookLM and ChatGPT alike.

Clearly, the old "shit in, shit out" principle applies here too. If you feed it shallow strategy statements, that is exactly what you get thrown back at you. And if you write poor, imprecise prompts, your answers will be imprecise too.

That said, it didn't take many tweaks before all three services gave me real value.